AI models such as ChatGPT, Claude and Gemini require enormous resources to train and operate. Wallenberg Scholar Dejan Kostic thinks it is a democracy problem that only the largest businesses can afford to develop the models. The solution may be an entirely new AI platform.

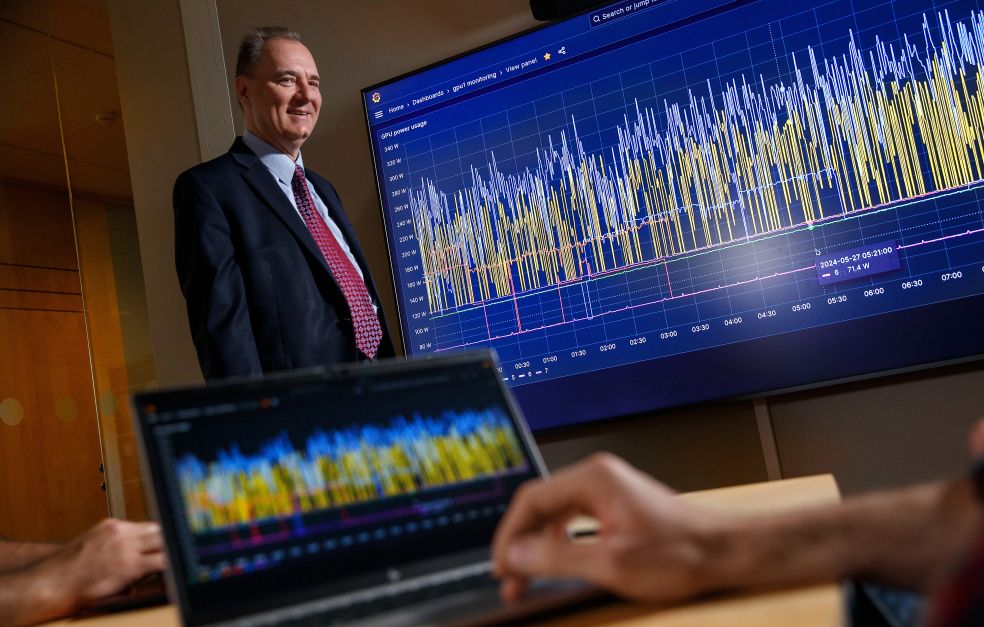

Dejan Kostic

Professor of Internetworking

Wallenberg Scholar

Institution:

KTH Royal Institute of Technology

Research field:

Internet technology: a broad field encompassing network systems, such as energy-proportional network systems, reliable software-defined networks and virtualization of network functions.

A ChatGPT for every child: that is how Kostic describes his goal as a Wallenberg Scholar. Simply put, this means creating a new platform that is simple and cheap enough to give every child their own AI mentor.

“Even now many people across the world use AI tools – but by no means everyone. My vision is that every child on the planet should have access to their own AI mentor to help them realize their potential,” Kostic says.

He thinks it will be difficult to achieve this using current technology. For instance, training a large language model like ChatGPT requires access to computer servers containing thousands of traditional processors and graphics processors (GPUs). Only specialized GPUs can manage the demanding mathematical computations that are common in the world of AI.

Once a language model has been fully trained, access to similar computational power is needed so it can answer questions and solve problems. In the AI field this process is known as inference, i.e., the trained machine learning model draws conclusions from new data.

All this involves huge investment in data centers, which consume enormous quantities of energy, and in many cases cause higher carbon dioxide emissions.

“The company behind ChatGPT has not made the cost of operating the model public. But a conservative estimate has been made, arriving at a figure of about a quarter of a billion dollars each year. This means that only the largest enterprises can afford to develop and operate these large models.”

Impending democracy problem

No one is likely to have missed the AI booms of recent years. But as yet, no one has managed to achieve the holy grail of AI: artificial intelligence of a more general nature. This means AI on a par with the way human beings absorb and process information. In Kostic’s view, anyone who succeeds in this aim will gain such a major advantage that it will constitute a democracy problem.

“What will happen to the rest of us who don’t have access to this new form of AI?”

To avoid the risk of only a select few deriving benefit from new AI models, Kostic wants to develop a new platform from which to operate language models. The platform is intended to allow multiple smaller, specialized models to run in parallel and cooperate with each other. Smaller models do not require as many resources to operate, and have the potential to provide better answers than the larger, more general AI models.

“This is my first major project in the AI field, and I am extremely grateful for the support I have received from the Knut and Alice Wallenberg Foundation.”

“Hopefully, if we manage to get these smaller expert models to work together on a new kind of platform, we will be able to match the language models used by large companies,” Kostic says.

The platform needs to be adaptable, and to cope with language models being added and removed during operation, depending on the problems it needs to solve. It must also be possible to operate it using simpler technology than that currently used.

Lower energy requirement

A key part of the project is to reduce the amount of energy needed to operate an AI model.

“We aim to reduce energy consumption to one-tenth of current levels. This is naturally extremely hard, but setting a goal that is ostensibly unachievable is highly motivating.”

Kostic has succeeded before with similar ambitions. In one project funded under the EU ERC framework program, he managed to achieve a tenfold increase in the performance of traditional internet servers. The project was carried out jointly with Ericsson and WASP (Wallenberg Autonomous Systems and Software Program), among others.

Current AI systems require computers in which four graphics processors are clustered together with a traditional processor. While the graphics processors perform the demanding computations, the traditional processor takes care of communication. There would be many advantages in terms of energy consumption and speed if the graphics processors could instead communicate directly with each other.

“This is already possible when training the models, but not during inference. But we have already published some research showing how this could be done.”

Much faster

If the traditional processors can be eliminated, the energy requirement may fall by one quarter. But the main advantage is the increased speed. The challenge, however, is to get the communication to flow at the right rate.

“Without the traditional processors, there is no natural buffer, which is needed to enable the computations to flow. So we need to create a little magic if we are to succeed.”

Rapid developments in the field of AI mean that competition is intense. But this is not something that worries Kostic – on the contrary:

“The end product of our work is usually software, which we release as open source, so that other researchers and businesses can use it. And there is currently also a discussion among companies about sharing their results with each other to a greater extent,” Kostic adds.

Text Magnus Trogen Pahlén

Translation Maxwell Arding

Photo Magnus Bergström